If you haven’t yet heard about the latest player in the AI space – DeepSeek – then it’s time to familiarise yourself with what’s been touted as the AI chatbot that will surpass ChatGPT.

While some reports herald DeepSeek as a powerful AI tool built with more cost-effective technology than ChatGPT, serious security concerns have led governments worldwide – including Australia’s – to ban its use on official devices.

Here’s what you need to know.

Is DeepSeek more advanced than ChatGPT?

DeepSeek has reportedly been developed using more advanced technology than OpenAI’s ChatGPT, but can we really trust these claims? While it may offer powerful features, much of the discussion around its superiority is speculation. AI models are complex, and factors such as data sources, infrastructure, and ongoing development play a crucial role in their effectiveness.

The bigger concern isn’t necessarily whether DeepSeek is more powerful, but rather the risks that it could pose to the users and businesses that engage with it.

Major security risks: What’s at stake?

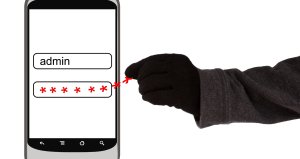

One of the most alarming aspects of DeepSeek is its reported request for root/administrator-level access to your devices. This is a major red flag, as it means the app could potentially access everything on your device: files, emails, passwords, sensitive business data, and more.

All of the data captured by DeepSeek is stored in China-based data centres, which means your potentially sensitive data is being moved beyond Australian borders to a country with recognised involvement in cyber attacks against Western countries.

Additionally, under Chinese legislation, the Chinese government has the authority to access any data collected by China-based organisations. This means that anything DeepSeek has access to could – in theory – be accessed by Chinese authorities.

Many governments around the world have already acted on these concerns. The Australian government is among them, with DeepSeek banned from use on government devices to mitigate potential security breaches.

What data does DeepSeek collect?

Even if DeepSeek can’t gain full access to your device, its own privacy policy states that it collects a large amount of information from users, including:

- Email Address

- Phone Number

- Date of Birth

- All user input – including text and audio

- Technical information – including your device model, operating system, IP address, and even keystroke patterns (which could potentially include passwords)

For business owners, this raises major concerns, particularly when employees may be using AI tools on work devices. If a staff member inputs sensitive customer or business data into DeepSeek (and 11% of the data employees paste into ChatGPT is reportedly considered ‘sensitive’), that information could be stored indefinitely on overseas servers without your knowledge.

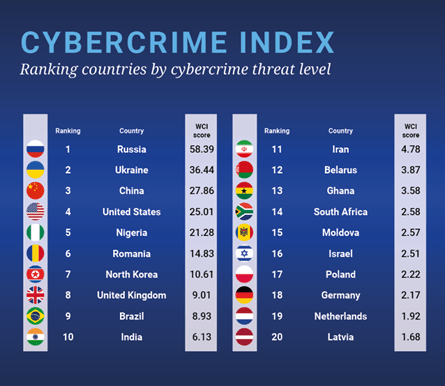

This is also a concern as Chinese-based servers are among the most targeted by cyber criminals worldwide:

Image Source: https://www.ox.ac.uk/news/2024-04-10-world-first-cybercrime-index-ranks-countries-cybercrime-threat-level

AI tools and business policies

Whether you accept it or not, AI tools are already being used in your business. In fact, some 68% of employees using generative AI reportedly did not tell their employer. Simply blocking access to these tools on work devices isn’t enough, as staff can still access them on their personal devices.

So, what can you do?

1. Establish an AI usage policy

If your company does not already have an AI tool usage policy, now is the time to create one. This framework should define the operational limits and protective measures required for the safe deployment of AI. Ideally, it would outline:

- Which AI tools staff are and aren’t allowed to use.

- The types of data which can and cannot be input into AI tools.

- A commitment to meet all relevant regulatory requirements relating to your industry – particularly important in certain fields such as health or aged care, NDIS, education, and so on.

- Clear links to your other policies including privacy, security, quality, and so on.
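For teams that want their tooling to check against the policy, the first two points above can also be captured in a machine-readable form. The following is a minimal sketch only: the class name `AIUsagePolicy`, the tool names, and the data categories are all hypothetical placeholders, not recommendations, and a real policy would be written and approved by the business first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUsagePolicy:
    """Hypothetical, simplified representation of an AI usage policy."""
    approved_tools: frozenset   # tools staff may use
    banned_tools: frozenset     # tools staff must not use
    prohibited_data: frozenset  # data types that must never be entered

    def tool_status(self, tool: str) -> str:
        """Return 'banned', 'approved', or 'needs review' for a tool name."""
        name = tool.strip().lower()
        if name in self.banned_tools:
            return "banned"
        if name in self.approved_tools:
            return "approved"
        # Anything not explicitly listed is referred for review.
        return "needs review"

    def may_submit(self, tool: str, data_type: str) -> bool:
        """True only for an approved tool and a non-prohibited data type."""
        allowed_tool = self.tool_status(tool) == "approved"
        allowed_data = data_type.strip().lower() not in self.prohibited_data
        return allowed_tool and allowed_data


# Example values are placeholders (assumptions), not advice on specific tools.
POLICY = AIUsagePolicy(
    approved_tools=frozenset({"approved-assistant"}),
    banned_tools=frozenset({"deepseek"}),
    prohibited_data=frozenset({"customer records", "passwords", "health data"}),
)

print(POLICY.tool_status("DeepSeek"))
print(POLICY.may_submit("approved-assistant", "marketing copy"))
print(POLICY.may_submit("approved-assistant", "Passwords"))
```

Treating unlisted tools as "needs review" rather than allowed is a deliberate choice in this sketch: new AI tools appear faster than a list of banned ones can be updated.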

2. Implement security controls

Ensure that your business has appropriate technical controls in place to restrict the use of unauthorised AI tools on company devices. This could include:

- Blocking access to banned AI tools.

- Monitoring AI tool usage within the company network.

- Regularly educating staff on the risks of inputting sensitive data into AI platforms.
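As a rough illustration of the first two controls, the sketch below checks hostnames against a blocklist and flags matching entries in a simplified access log. It rests on several assumptions: the blocklist contents should be verified by your IT provider, the `<user> <hostname>` log format is hypothetical, and in practice blocking is usually enforced by a firewall, DNS filter, or device management tool rather than a script.

```python
# Assumed blocklist; confirm the domains to block with your IT provider.
BLOCKED_DOMAINS = {"deepseek.com"}


def is_blocked(hostname: str, blocked=BLOCKED_DOMAINS) -> bool:
    """True if hostname is a blocked domain or one of its subdomains."""
    host = hostname.strip().lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in blocked)


def flag_requests(log_lines):
    """Return (user, hostname) pairs for log entries that hit the blocklist.

    Each line is assumed to look like '<user> <hostname>' (hypothetical
    format); lines that do not match are skipped.
    """
    flagged = []
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue
        user, host = parts
        if is_blocked(host):
            flagged.append((user, host))
    return flagged


sample_log = [
    "alice chat.deepseek.com",
    "bob example.org",
    "carol notdeepseek.com",
]
print(flag_requests(sample_log))
```

Matching on the full domain or a dot-separated suffix, rather than a plain substring, avoids flagging unrelated sites whose names merely contain the blocked text.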

3. Regular training & communication

Even the best policies are ineffective if staff aren’t aware of them. We recommend that you:

- Conduct regular training sessions on AI and other cyber security risks.

- Send reminders about what tools are approved and which ones are not.

- Encourage employees to report any security concerns related to AI usage.

DeepSeek may seem like an exciting new AI tool to try in your business, but the security and privacy risks far outweigh any potential benefits. Given its ability to collect and store vast amounts of data, it’s crucial for companies to take AI security seriously.

By implementing a clear AI usage policy, enforcing strict security controls, and educating staff, business leaders can protect their companies from unnecessary risks. In a world where AI is becoming an essential tool, staying informed and proactive is the best defence.